There’s no question that artificial intelligence is a tool with enormous potential. Because it can process information far faster than humans—and can manage vast quantities of data—it’s slowly but surely becoming a part of medicine in the United States and around the world. Here, we’ll review some of the ways AI could benefit ophthalmology; some of the research already underway; and potential pitfalls that are now becoming clear as the technology evolves.

AI and “Deep Learning”

In the realm of artificial intelligence in medicine, the process referred to as “deep learning” is of particular interest. Dimitri Azar, MD, MBA, distinguished professor of ophthalmology at the University of Illinois College of Medicine in Chicago and senior director of ophthalmic innovations at Alphabet Verily Life Sciences, explains that, in the case of image analysis, deep learning involves constantly refining the weighting, or relative importance, of details in the images as system learning progresses. “These programs allocate a certain weight for criteria that they discover in these images,” he says. “With each new image the weights are modified to accommodate the new information, and the process keeps repeating. Humans can do this type of analysis at a smaller scale, but when the amount of data soars, we need help from AI.”
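The weight adjustment Dr. Azar describes can be illustrated with a toy example. The sketch below is not a deep network and is not any system mentioned in this article; it is a single-layer logistic model, and the two "features," the labels and the learning rate are all hypothetical. It shows only the basic loop: make a prediction, measure the error, nudge each weight, and repeat.

```python
import math

def predict(weights, features):
    """Weighted sum of the features, squashed into a probability between 0 and 1."""
    z = sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

def update(weights, features, label, learning_rate=0.5):
    """Nudge each weight in proportion to the prediction error on one example."""
    error = predict(weights, features) - label
    return [w - learning_rate * error * x for w, x in zip(weights, features)]

# Hypothetical training examples: (bias term, feature A, feature B) -> label.
# Label 1 stands for "disease present," 0 for "disease absent."
examples = [
    ((1.0, 0.9, 0.1), 1),
    ((1.0, 0.8, 0.3), 1),
    ((1.0, 0.2, 0.7), 0),
    ((1.0, 0.1, 0.9), 0),
]

weights = [0.0, 0.0, 0.0]
for _ in range(200):                      # the process keeps repeating
    for features, label in examples:      # each new example modifies the weights
        weights = update(weights, features, label)

print("learned weights:", [round(w, 2) for w in weights])
print("P(disease) for a new image:", round(predict(weights, (1.0, 0.85, 0.2)), 2))
```

A real deep-learning system stacks many such weighted layers and learns from far more images, but the repeated small corrections follow the same pattern.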

Potential areas in ophthalmology that might eventually benefit from this process include:

• corneal topography;

• the interpretation of fundus photography and OCT scans;

• IOL power prediction;

• screening for and diagnosing eye problems such as diabetic retinopathy, glaucoma, macular degeneration and dry eye;

• predicting the speed of progression of diseases such as macular degeneration and glaucoma;

• predicting treatment outcomes; and

• predicting the likelihood of needing treatment in the future.

At a more general level, AI may:

• help us capture photographs and scans more accurately;

• act as an automatic scribe;

• predict future medical crises that a patient may face;

• improve patient flow;

• analyze and sort data so that the physician has to view only the most relevant information; and

• help to discover new associations between diseases and the characteristics of a patient’s eye.

Current Research: A Sampling

Here are just a few recent developments involving AI:

• IDx-DR (IDx Technologies) has become the first FDA-approved device that can make a screening decision without clinician input. Data from a prospective clinical trial of the IDx-DR system, involving 900 subjects with diabetes at 10 primary care sites across the United States, were recently published. The system exceeded all prespecified superiority endpoints with 87-percent sensitivity, 90-percent specificity, and a 96-percent imageability rate.1

• Philippe M. Burlina, PhD, and colleagues have used two deep-learning algorithms to categorize more than 130,000 fundus images as falling into one of two categories: disease-free/early macular degeneration, or intermediate/advanced macular degeneration. The algorithms’ accuracy ranged from 88.4 to 91.6 percent.2

• A research group used a deep-learning system to detect severe nonproliferative diabetic retinopathy and proliferative diabetic retinopathy, as well as macular degeneration and glaucoma.3 A validation data set of 71,896 images revealed a sensitivity of 90.5 percent and specificity of 91.6 percent for referable diabetic retinopathy; a sensitivity of 96.4 percent and specificity of 87.2 percent for possible glaucoma; and a sensitivity of 93.2 percent and specificity of 88.7 percent for macular degeneration.

• Researchers in Japan used a deep-learning algorithm to detect rhegmatogenous retinal detachment in Optos ultra-widefield fundus images. It demonstrated a sensitivity of 97.6 percent and specificity of 96.5 percent.4 The same research group conducted another study using Optos ultra-widefield fundus images to detect neovascular macular degeneration; the sensitivity was 100 percent; specificity was 97.31 percent.5

• Researchers in Germany trained a deep convolutional artificial neural network to predict patients’ need for anti-VEGF injections, based on central retinal OCT scans and their intravitreal injection records. When applied to a validation data set after training, the algorithm showed a prediction accuracy of 95.5 percent, with a sensitivity of 90.1 percent and specificity of 96.2 percent.6

Figure: The quantity of published research reveals a steady increase in interest in AI.

• Researchers in Vienna evaluated the potential of “random forest” machine learning to predict best-corrected visual acuity outcomes in patients receiving ranibizumab therapy for neovascular AMD, using structural and functional assessments during the initiation phase. The model’s accuracy increased each month; at month three, final visual acuity outcomes could be predicted with an accuracy of R² = 0.7.7

• The same team conducted a pilot study to see if machine learning could predict the frequency of anti-VEGF injection requirements in neovascular AMD patients, using OCT images acquired during the initiation phase. The algorithm’s accuracy for predicting low need for treatment was AUC=0.7; its accuracy for predicting high treatment need was AUC=0.77.8
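The studies above report sensitivity, specificity and AUC. For readers who want to see how those figures are derived, here is a minimal sketch. The patient counts and scores are hypothetical and are not taken from any of the cited studies; the function names are ours.

```python
def sensitivity(true_pos, false_neg):
    """Share of patients with the disease whom the test correctly flags."""
    return true_pos / (true_pos + false_neg)

def specificity(true_neg, false_pos):
    """Share of patients without the disease whom the test correctly clears."""
    return true_neg / (true_neg + false_pos)

def auc(scores_diseased, scores_healthy):
    """Area under the ROC curve: the chance that a randomly chosen diseased
    patient gets a higher score than a randomly chosen healthy one.
    Ties count as half a win."""
    wins = 0.0
    for d in scores_diseased:
        for h in scores_healthy:
            if d > h:
                wins += 1.0
            elif d == h:
                wins += 0.5
    return wins / (len(scores_diseased) * len(scores_healthy))

# Hypothetical screening results for 1,000 patients, 200 of whom have disease.
true_pos, false_neg = 174, 26
true_neg, false_pos = 720, 80

sens = sensitivity(true_pos, false_neg)
spec = specificity(true_neg, false_pos)
example_auc = auc([0.9, 0.8, 0.4], [0.7, 0.3, 0.2])

print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}, AUC = {example_auc:.2f}")
```

An AUC of 0.5 corresponds to guessing and 1.0 to perfect separation, which gives some context for values such as 0.7 and 0.77.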

Despite the obvious promise of this technology, potential problems are also on the horizon. Now that AI screening is a reality, concerns include: Will a “clean” report from an AI screening lull people into a false sense of security about their overall eye health? Will over-reliance on technology cause doctors’ skills to atrophy due to lack of use? Will AI cause technicians and physicians to lose their jobs? And perhaps most significant: Will imperfect technology cause patients to suffer harm due to a missed diagnosis or incorrect prediction?

Here, several experts address these and other questions.

The Benefits of AI

Pradeep S. Prasad, MD, associate professor of ophthalmology at the Jules Stein Eye Institute, David Geffen School of Medicine at UCLA, and chief of the Division of Ophthalmology at Harbor-UCLA Medical Center, sees AI as having tremendous potential to help manage patients in a world of limited resources. “Part of my job here at UCLA is to oversee one of our county hospitals—Harbor-UCLA Medical Center, which takes care of thousands of patients,” he explains. “One of the challenges we face is a very large number of diabetic patients, many of whom have very advanced stages of disease. As a result, the volume of patients we need to screen for vision-related complications of diabetes is very high.

“In the past, those patients would have been referred to the eye clinic, but the clinic only has so much capacity,” he continues. “So, we did what a lot of health systems are doing—we implemented a teleretinal screening program. Ours is based in the primary care setting, where fundus photos are taken of patients who are diabetic. Those images are then uploaded to a server; within a day or two, trained image readers review the images and grade them for two things: whether or not the patient has diabetic retinopathy and needs to be referred to an ophthalmologist for further evaluation, and whether there’s evidence of other nondiabetic disease, such as glaucoma or cataract.”

Dr. Prasad says this system has resulted in a significant increase in the number of patients who are screened. “The problem is, there’s still a huge volume of patients for us to see,” he says. “So, we’ve implemented a second-tier screening program, in which we do further imaging with wide-angle fundus photography and OCT. These two tests give us more information than we can acquire in the primary care setting. The result is that we can further pare down the number of patients who actually have to see an ophthalmologist. This has been very effective at decreasing the number of patients coming in who don’t really need to be seen.”

Figure: AI has shown significant skill at analyzing images, something still largely done by human readers (above). Some surgeons are not impressed by AI’s comparable accuracy, but the immediate turnaround time provided by AI is a significant practical advantage.

Dr. Prasad notes that AI has the potential to improve the current system in at least three ways. “The first way AI can help is in terms of time efficiency,” he says. “Right now, after an image of the retina is acquired, it’s sent to a server. At some point, usually within 24 to 48 hours, the image is read and a recommendation goes out. The problem is, by that time the patient has left the primary care setting. Of course, there are other opportunities to educate the patient about the disease later on, but it’s very powerful to be able to educate the patient and use the findings as a motivating factor immediately after the image is captured.

“The second way AI may help is in terms of cost,” he continues. “It’s expensive to hire people to read these images. We have limited resources, and we want to use our resources as effectively as possible. AI can get accurate readings in a cost-efficient way.

“The third potential advantage of AI is accuracy,” he says. “There’s a lot of evidence that AI can grade images as accurately as human graders, and potentially more accurately, and do it quickly. For example, one criterion for moderate or intermediate-stage nonproliferative diabetic retinopathy might involve counting the number of hemorrhages, or other features in the image that would imply different stages of disease. If that’s done by a human being it can be very time-consuming and potentially not as accurate as if a machine does the counting.”
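To make the counting task concrete, here is a rough sketch of how software might count separate lesions once they have been marked in an image. It assumes, hypothetically, that an earlier step has already produced a grid in which 1 marks a hemorrhage pixel and 0 marks background; real grading software is far more involved, and this is not the method used by any system named in this article.

```python
def count_lesions(mask):
    """Count separate groups of touching 1s (up, down, left, right) in a 2D grid."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] == 1 and not seen[r][c]:
                count += 1                 # found a lesion not counted before
                seen[r][c] = True
                stack = [(r, c)]
                while stack:               # mark every pixel belonging to it
                    y, x = stack.pop()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            stack.append((ny, nx))
    return count

# Hypothetical mask containing four separate lesions.
example_mask = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 1, 0],
    [1, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
]
lesion_count = count_lesions(example_mask)
print("lesions found:", lesion_count)
```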

Dr. Prasad says the other thing he finds exciting about AI is the possibility that it may uncover associations between disease and detectable characteristics in the eye—signs that doctors are currently unaware of. “Perhaps the software will detect a link between disease and vessel tortuosity, or ratios of vessel diameters, or some other features we’re not aware of,” he explains. “Maybe it will find a link to the location or distribution of pathology and be able to correlate that with the patient’s risk going forward. It’s really exciting that AI may not only give us information about the patient’s current disease state, but also may give us some insight into what else is going on, including what a patient’s risk is going forward and what benchmarks a patient must achieve to reduce his risk.”

Potential Downsides

Despite the potential benefits, many doctors worry about negative developments that could follow:

• AI will miss things it’s not looking for, giving patients a false sense of security. “This is true of any screening program, including the non-machine-based teleretinal screening-based programs,” Dr. Prasad points out. “It’s not necessarily inherent to machine learning.”

Dr. Azar says he’s not worried about this. “The condition a diabetic patient is likely to have is diabetic retinopathy, so it makes sense to screen for that,” he says. “Furthermore, the programs under development at places like Google are able to recognize many more diseases than just diabetic retinopathy. We have to start somewhere, and it makes sense to start with diabetic retinopathy because it’s the lowest-hanging fruit. But the field will not stop at diabetic retinopathy screening.”

• A machine will miss things that a human reader would notice. “That has to do with training,” Dr. Prasad points out. “If you’re only asking the machine to look for certain things, then it will only look for those things. If the machine overlooks something, I’d say that’s not the fault of the machine—it’s the fault of the person who trained the machine.”

• AI could result in people losing their jobs as machines do those same jobs faster and more accurately. Rich Caruana, PhD, is a principal research scientist at Microsoft Research; he’s been involved with AI for 30 years and deep neural nets for five or 10 years. Although he’s concerned about current limitations of AI in health care (more on that below), Dr. Caruana foresees it eventually becoming accurate enough to make some professions outmoded.

“Because deep learning works so well on images, some deep learning researchers suspect that one of the first specialties that will disappear in medicine will be image reading in radiology or diabetic retinopathy,” he says. “For that reason, I wouldn’t recommend that my kid go into radiology right now. Somewhere between five and 20 years from now, you’ll want your radiogram read by a computer, not by a human. The computer will be more accurate.”

Dr. Prasad notes that such changes are part of progress. “The industrial revolution changed the human economic landscape, too,” he says. “New technology appears and people’s jobs change. What they thought was part of their job is no longer there, so they find other things to do. I don’t think people should look at it as a threat; I think they should look at it as an opportunity.”

Dr. Prasad adds that no artificial intelligence is going to eliminate the need for doctors. “There will always be opportunities for human beings to take care of patients,” he says. “It’s a field that’s very much based on human interpersonal interaction. You can give a patient a lot of information, but unless you have a trusting relationship and a dialogue between the provider and the patient, the implementation of a treatment plan will probably falter.”

“Doctors will never be replaced by AI technology,” agrees Dr. Azar. “I believe there will be a greater emphasis on the humanistic elements of medicine to complement the inevitable use of data analytics and computers in medicine. For that reason there will be a greater need to educate medical students, residents and fellows in the art and science of compassionate patient care.”

“I envision a health-care system in the future where I don’t have to spend my time reviewing images,” adds Dr. Prasad. “I can spend more of my time talking to my patients and explaining to them what the information means and what they can do to improve their health. I think that’s a much more useful way to spend my day than doing something a machine could do.”

• AI could cause surgeons’ skills to atrophy as machines take over more responsibilities. Dr. Azar says he’s not concerned about these changes causing surgeons’ skills to atrophy. “We’ll just depend on computers to do the tasks that they can perform better than us,” he says. “Meanwhile, this will give us more time to focus on doing the things that computers can’t do. The health-care system will become more efficient, and doctors will enjoy taking care of patients who really need their help. Instead of replacing ophthalmologists, AI will make their work more relevant.”

The Importance of Intelligibility

Dr. Caruana is a proponent of so-called “intelligible machine learning,” which eliminates some of the downsides of the “black box” approach. “Intelligible machine learning refers to systems that are able to describe what they’ve learned,” he explains. “I think that’s very important for health care. When we began this work seven or eight years ago, very few people were concerned about understanding what these systems were learning. Now, lots of research is being done in this area.”

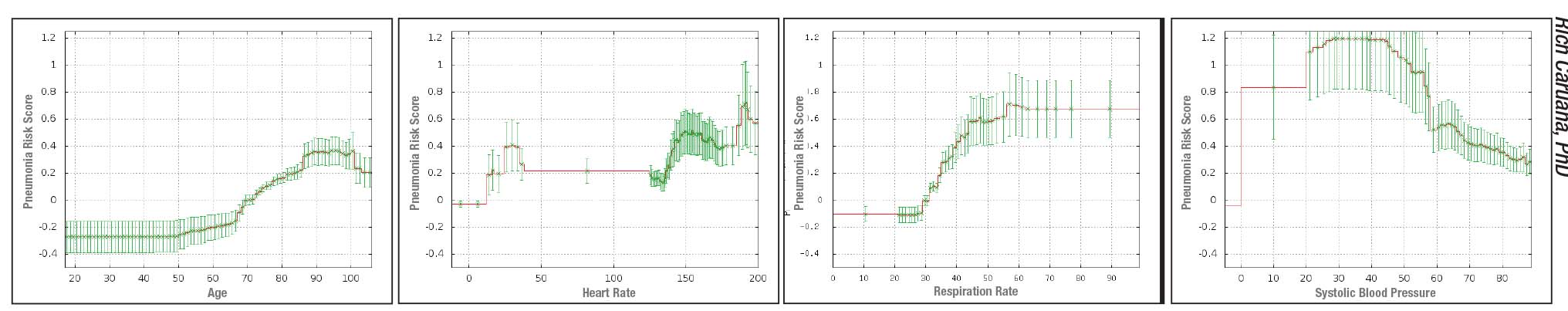

Above: Sample graphs produced by an intelligible deep learning system designed to predict the probability of death from pneumonia, revealing the correlations it’s found between pneumonia outcomes in its learning data set and 46 features such as age, blood pressure, blood sugar level, and having asthma or heart disease. The model also produces 10 pairwise interaction graphs (see examples, facing page). These are all intended to be used by a doctor as extra information to help assess patient risk and need for hospitalization.

Dr. Caruana says his interest in this began back when he was a graduate student at Carnegie Mellon in the mid-1990s. “I was asked to train a neural net to look at data from patients diagnosed as having pneumonia to figure out which patients should be put in the hospital,” he says. “Pneumonia is serious; five to 10 percent of those who get pneumonia die from it. But if you’re an otherwise healthy individual, it’s actually safer for you to be treated as an outpatient because of the possibility of picking up another infection in the hospital.

“The neural net I trained turned out to be very accurate,” he says. “But when I was asked whether we should use the program on real patients, I said no. Even though the model appeared to be very accurate, it was a ‘black box’; we didn’t understand how it was making its predictions. That meant that there could be problems in there we didn’t know about.

“My fears were based on experience,” he continues. “A colleague of mine trained a machine learning model to do the same thing using the same data set; his system concluded that your risk of dying from pneumonia was smaller if you had asthma! We knew that wasn’t right. Later, when we attended a project meeting with MDs present, they confirmed that asthma is, in fact, a serious risk factor if a patient develops pneumonia. But they also explained why the program might have come to the opposite conclusion. They pointed out that asthmatics pay attention to how they’re breathing, and they probably have a doctor who is treating them. As soon as these individuals sense something is wrong they go to see the doctor. They get a proper diagnosis pretty quickly, and because they have asthma, they get high-quality, aggressive treatment. The result is that the asthmatics in the data set looked like they had less chance of dying—but only because they got to a doctor faster and received aggressive treatment when they got there.

“A machine learning model doesn’t understand this at all,” he points out. “It just sees that the people with a history of asthma have a smaller chance of dying. It doesn’t know why. And because of that, it’s happy to go ahead and predict that asthmatics are lower-risk. That means that if we use this model to try to decide which patients diagnosed with pneumonia should get rapid attention or be put in the hospital or receive more aggressive treatment, we could be putting asthmatics at risk. We’d be denying them the sort of rapid, aggressive treatment that, in fact, made them look like they were at lower risk in the data set.”
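The asthma paradox can be reproduced with simple arithmetic. In the sketch below every number is hypothetical, chosen only to mimic the pattern Dr. Caruana describes: asthmatics have a higher underlying risk, but most of them receive rapid, aggressive care, so their recorded death rate ends up lower. The last calculation shows what could happen if a model's "low risk" label caused asthmatics to lose that care.

```python
# Hypothetical risk of death from pneumonia under routine care.
BASE_RISK = {"asthma": 0.15, "no_asthma": 0.10}

# Hypothetical share of each group that receives rapid, aggressive care today.
P_AGGRESSIVE = {"asthma": 0.90, "no_asthma": 0.10}

# Hypothetical effect of aggressive care: risk falls to 30 percent of baseline.
AGGRESSIVE_MULTIPLIER = 0.30

def observed_mortality(group, p_aggressive=None):
    """Expected death rate in a group, given how often it gets aggressive care."""
    p = P_AGGRESSIVE[group] if p_aggressive is None else p_aggressive
    base = BASE_RISK[group]
    return p * base * AGGRESSIVE_MULTIPLIER + (1 - p) * base

# What the data set records (and what a model learns from):
asthma_in_data = observed_mortality("asthma")
others_in_data = observed_mortality("no_asthma")

# What happens if asthmatics are triaged like everyone else:
asthma_if_deprioritized = observed_mortality("asthma", p_aggressive=0.10)

print(f"asthma, as recorded:     {asthma_in_data:.4f}")
print(f"no asthma, as recorded:  {others_in_data:.4f}")
print(f"asthma, if deprioritized: {asthma_if_deprioritized:.4f}")
```

With these made-up inputs, asthma looks protective in the recorded data even though the underlying risk is higher, and removing the aggressive care more than doubles the expected death rate for asthmatics.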

Dr. Caruana notes that this particular problem could probably be fixed by altering the program. “What really worried me,” he says, “was what else the neural net might have learned that we couldn’t see. The real problem is the ‘unknown unknowns.’ I can’t fix a problem if I don’t know it’s there, and the neural net at that point was a ‘black box.’

“Unfortunately, today’s really accurate learning methods tend not to be very intelligible,” he says. “So, I’ve been working for the past seven years or so on developing intelligible machine learning methods that wouldn’t come with reduced accuracy. Thanks to a lot of hard work, our current intelligible model is just as accurate as the neural net. Now we’ve been able to apply it to the same data set, to see what it learned. It did indeed learn that asthma is good for pneumonia patients. It also learned that heart disease and chest pain and being more than 100 years old are good for pneumonia patients! In fact, it concluded that the most protective thing of all is having had a heart attack within the past few years. The explanation was the same: If you’ve had a heart attack, you waste no time getting help when you sense a problem exists.

“Now, with our new intelligible systems, we’re able to see things like this in the model and fix them before we deploy them,” he says. “This is why I believe intelligibility is critical if we’re going to use these models in health care.”
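One way to picture "seeing and fixing" what a model has learned is an additive model, in which each feature contributes its own separate term to the risk score. The sketch below is an illustration under that assumption; the article does not specify the internal form of Dr. Caruana's system, and the feature names, values and the repair (setting a suspicious term to zero) are all hypothetical.

```python
import math

# Hypothetical per-feature contributions to the log-odds of death.
INTERCEPT = -2.5
learned_terms = {
    "age_over_65":        {True: 0.8,  False: 0.0},
    "low_blood_pressure": {True: 0.6,  False: 0.0},
    "asthma":             {True: -0.4, False: 0.0},  # looks protective: suspicious
}

def risk(patient, terms):
    """Add up each feature's contribution and convert the total to a probability."""
    score = INTERCEPT + sum(terms[name][patient[name]] for name in terms)
    return 1.0 / (1.0 + math.exp(-score))

def suspicious_terms(terms, known_risk_factors):
    """Known risk factors to which the model has assigned a protective effect."""
    return [name for name in known_risk_factors if terms[name][True] < 0]

def neutralize(terms, names):
    """Return a copy of the terms in which the named features no longer lower risk."""
    fixed = {name: dict(values) for name, values in terms.items()}
    for name in names:
        fixed[name][True] = 0.0
    return fixed

# A clinician reviewing the model knows asthma is a risk factor, not a benefit.
flagged = suspicious_terms(learned_terms, ["asthma", "age_over_65"])
fixed_terms = neutralize(learned_terms, flagged)

patient = {"age_over_65": True, "low_blood_pressure": False, "asthma": True}
print("flagged terms:", flagged)
print("risk before fix:", round(risk(patient, learned_terms), 3))
print("risk after fix: ", round(risk(patient, fixed_terms), 3))
```

Because each term can be read on its own, a clinician can spot the implausible one and correct it without retraining. A "black box" offers no equivalent handle.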

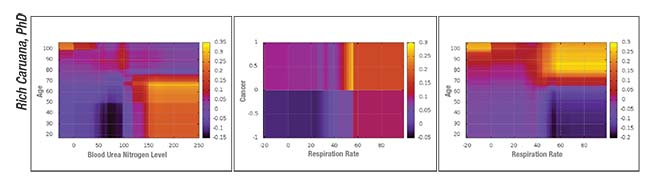

Sample pairwise interaction graphs produced by an intelligible deep learning system designed to predict the probability of death from pneumonia.

Other Potential Problems

In addition to the problems inherent in the “black box” approach, Dr. Caruana points out a number of other potential problems that need to be accounted for before AI comes into widespread use in health care:

• Testing accuracy on similar data sets hides potential problems. “Even though a machine learning system may be trained on one set of data and tested on another, both data sets usually look very similar,” notes Dr. Caruana. “That means that the same biases that appear in the training data—where, for example, it’s true that pneumonia patients with heart disease have half the probability of dying because they get care so quickly—are also true in the test data. As a result, the model is rewarded for predicting these things. It looks like the system has super-high accuracy, partly because the incorrect things it’s learned are true in both data sets. But if you were to deploy that model in the clinic to intervene in health care, it would actually hurt certain patients.

“The bottom line is: Accuracy in the real world is not the same as accuracy in the lab,” he says. “Unfortunately, the decision to release a model for use in the clinic is often based on its accuracy in the lab.”

• You can’t fix a problem you don’t know about. “We’re all smart people,” notes Dr. Caruana. “Once we find a problem we can fix it. But if we don’t know about the problem, it won’t get fixed. In some situations, a few problems in a machine learning system won’t hurt anyone; it might just be a little less efficient. But in health care, you’re talking about people’s lives. We have to put in a little more effort and be a little more cautious.

“With ‘black box’ machine learning there’s an awful lot you don’t know,” he continues. “You don’t really know what was in the data, and you don’t know what the model learned. All you know is that the model seems to be accurate on test data—which looks a lot like the training data. That may be OK in some settings. However, in certain applications—like autonomous vehicle navigation or a nuclear power plant or health care for humans—you need to worry about these things. That’s why we’re trying to educate the community that there’s more going on here than people are normally aware of. We need to have some extra cautions and test procedures—and we need intelligibility.”

What about a system that’s just been trained to look for specific things? “Problems could occur there as well,” he says. “The data sets used to train the machines are always more complicated than we think. The system will learn from everything in the data that has a signal. Because of that, it’s probably learned some things that are not appropriate.”

• Data sets are imperfect. Dr. Caruana points out a key problem with machine learning models: There are always imperfections in the data set the system learns from. “Data sets are always imperfect because of things they include or omit,” he says. “There are many ways for a machine learning model to learn things that are wrong and could be harmful for patients.

“For example, I heard about a radiology data set in which the system was trying to predict whether a breast cancer lump was a tumor,” he continues. “In addition to learning what tumors look like, it also learned to recognize a tumor-size-measuring tool that surgeons would sometimes leave near the tumor while the image was taken. The tool would only have been used if the surgeon thought this really was a tumor, so the tool in the image was sure-fire evidence that this was cancer. The system learned that, and the accuracy of its predictions increased—but for a reason that was basically irrelevant.”

Dr. Caruana adds that image analysis can be confused by parts of an image that wouldn’t faze a human. “There are systems that are really good at recognizing an object like a cow standing by a barn on grass in a natural image,” he says. “However, it may not recognize the same cow standing by water, because the system is taking the background into account. Deep learning systems may end up drawing false conclusions based on information we wouldn’t want them to include. In health care this could be lethal.”
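The measuring-tool story also shows why accuracy in the lab can differ from accuracy in the clinic. The sketch below uses hypothetical image counts and a deliberately crude stand-in "model" that has learned nothing except the shortcut (tool visible means tumor). It scores well on data resembling its training set and falls to chance when no tool appears in the images.

```python
def shortcut_model(image):
    """Stand-in for a model that learned only the shortcut: tool visible -> tumor."""
    return image["tool_present"]

def accuracy(model, images):
    """Fraction of images on which the model's call matches the true label."""
    correct = sum(1 for image in images if model(image) == image["is_tumor"])
    return correct / len(images)

def make_images(tumor_with_tool, tumor_without_tool, benign):
    """Build a hypothetical data set from three counts."""
    images = []
    images += [{"tool_present": True, "is_tumor": True}] * tumor_with_tool
    images += [{"tool_present": False, "is_tumor": True}] * tumor_without_tool
    images += [{"tool_present": False, "is_tumor": False}] * benign
    return images

# Hypothetical lab test set: drawn from the same source as the training data,
# so most tumor images still include the measuring tool.
lab_images = make_images(tumor_with_tool=45, tumor_without_tool=5, benign=50)

# Hypothetical clinic images: taken before any surgeon has placed a tool.
clinic_images = make_images(tumor_with_tool=0, tumor_without_tool=50, benign=50)

lab_accuracy = accuracy(shortcut_model, lab_images)
clinic_accuracy = accuracy(shortcut_model, clinic_images)

print(f"accuracy in the lab:    {lab_accuracy:.2f}")
print(f"accuracy in the clinic: {clinic_accuracy:.2f}")
```

In the clinic scenario the shortcut model misses every tumor, yet nothing in its lab score would have warned anyone.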


• Intelligible machine learning technology doesn’t help with image-based analysis. Dr. Caruana notes that, unfortunately, an intelligible system that’s able to tell you what it has learned isn’t very helpful with image analysis. “Ophthalmology is very heavy on imaging,” he points out. “Unfortunately, the learning method I’ve been working on is not yet designed to work on images, because pointing out a correlation in an image isn’t helpful the way pointing out a correlation to age or blood pressure or diabetes is. The system might say the patient’s risk is higher if pixel 433 is a little red, and pixel 7,000 is black and white, and pixel 12 has a value greater than 7. Even if that was accurate, it wouldn’t be useful. So the advantages we gain from intelligibility, such as catching bogus things the machine has learned, can’t be used on systems that evaluate images, at least so far. When those systems make a mistake, the reason won’t be easy for us to uncover.”

• A machine learning system will only be reliable on a population exactly like the one it learned from. Dr. Caruana notes that a system is sometimes unintentionally used on a different population from the one on which it was trained. “One model was trained to predict the likelihood of 30-day hospital readmission, using data from multiple hospitals,” he says. “The data looked very good. It was then released to a much larger set of hospitals, including—by accident—a few children’s hospitals. The system had not been trained on data from children, so its accuracy was suddenly not as good. Fortunately, this was not a critical prediction, so a mistake wasn’t likely to injure patients. But it’s inappropriate for a model to be used on a population that differs in key ways from the learning data set.”
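A simple safeguard against this kind of mismatch is to record the ranges the model saw during training and to check each new patient against them before trusting a prediction. The sketch below is one hypothetical way to do that; the feature names and ranges are invented for illustration and do not describe the readmission model in Dr. Caruana's example.

```python
# Hypothetical summary of the population the model was trained on.
TRAINING_RANGES = {
    "age_years": (18, 95),
    "length_of_stay_days": (0, 60),
}

def features_outside_training(patient, ranges=TRAINING_RANGES):
    """List the features whose values fall outside what the model saw in training."""
    return [
        name
        for name, (low, high) in ranges.items()
        if not low <= patient[name] <= high
    ]

adult = {"age_years": 54, "length_of_stay_days": 3}
child = {"age_years": 7, "length_of_stay_days": 2}

adult_flags = features_outside_training(adult)
child_flags = features_outside_training(child)

print("adult patient flags:", adult_flags)   # nothing outside the training range
print("child patient flags:", child_flags)   # age is outside the training range
```

A check like this catches only the differences someone thought to record, so it complements clinical judgment about where a model should be used and does not replace it.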

• When the input to the system evolves, the learning has to be redone. “One system was trained to read MRIs, and it did well,” says Dr. Caruana. “Then it was used on a new MRI machine that had higher resolution and lower noise, which doctors were very happy about. However, the system was suddenly making less-accurate predictions, simply because the system wasn’t trained on that level of data.

“These are the kinds of risks I’m worried about,” he says. “Many people in the health-care community think these systems are really accurate, maybe more accurate than humans, so health care would be best served by getting them out there as fast as possible. I think they don’t realize the risks, because they can’t see the little land mines hidden inside their machine learning model.”

Nevertheless …

Dr. Caruana admits that even an imperfect system could still be worth using. “A lot of what the models learn is wonderful,” he notes. “Doctors are sometimes intrigued by connections the systems uncover. They say, ‘Oh, that’s interesting. I’ll bet that’s true. Maybe we should change what we’re doing because of that.’ Or they say, ‘We all believe this is true, but we’ve never seen it in data before.’

“Furthermore, if a system has been clinically tested, it might be as good, or better than, other methods for detecting disease,” he continues. “That might be true even if it does make a few mistakes. If an AI tool on my smartphone lets me check skin lesions or moles every week to look for skin cancer, that could be an incredibly valuable addition to diagnosis, even if it’s not as good as the best human doctors. If it causes people with a problem to get to a specialist sooner, that’s great. But again—if it occasionally makes a mistake with certain skin types or some kinds of skin anomalies because of bogus ‘rules’ it learned, someone could end up paying a high price.”

Dr. Caruana offers two pieces of advice to ensure the eventual success of AI in health care. “First, we need to proceed more cautiously,” he says. “A bad outcome could set back the field. We want to make sure there’s no ‘AI winter’ because a model gets deployed too quickly and recklessly and hurts patients. In addition to the injured patients, it might make people afraid of this type of technology.

“Second, when we deploy an AI learning system, we have to make sure that we have tracking and reporting, just like we have for drugs and procedures,” he continues. “That way, if the model suddenly makes a big mistake, we’ll recognize it as quickly as possible and pull the plug on it. Or, if the world changes over time and the model is no longer accurate, we’ll recognize that it’s not working as it was.”
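As a rough illustration of what such tracking might look like in software, the sketch below keeps a rolling record of whether recent predictions matched confirmed outcomes and raises a flag when accuracy drops below a threshold. The class name, window size and threshold are hypothetical choices, not an established standard or a description of any deployed system.

```python
from collections import deque

class AccuracyMonitor:
    """Track a model's accuracy over its most recent confirmed cases."""

    def __init__(self, window=50, min_accuracy=0.85):
        self.results = deque(maxlen=window)   # oldest results drop off automatically
        self.min_accuracy = min_accuracy

    def record(self, prediction, confirmed_outcome):
        """Store whether the model's prediction matched the confirmed outcome."""
        self.results.append(prediction == confirmed_outcome)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded yet."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def should_alert(self):
        """Alert only once the window is full and accuracy is below the threshold."""
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy() < self.min_accuracy

# Hypothetical usage: ten correct calls, then five wrong ones after something changes.
monitor = AccuracyMonitor(window=10, min_accuracy=0.8)
for _ in range(10):
    monitor.record(prediction=1, confirmed_outcome=1)
alert_before = monitor.should_alert()

for _ in range(5):
    monitor.record(prediction=1, confirmed_outcome=0)
alert_after = monitor.should_alert()

print("alert before change:", alert_before)
print("alert after change: ", alert_after, "accuracy:", monitor.accuracy())
```

The hard part in practice is obtaining confirmed outcomes promptly; a monitor like this is only as timely as the follow-up data that feeds it.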

“No technology is perfect,” agrees Dr. Prasad. “Even after AI is deployed on a larger scale, there’s still going to be a period where we learn and refine. That’s actually going to be the fun part. We’ll see how we can use it in ways that we can only imagine right now.”

Dr. Caruana believes that an ideal AI system in the future will benefit from both machine and human input. “Doctors and AI systems will make different kinds of mistakes, so we’ll probably have the highest accuracy if we get the best of both of them,” he says. “The important thing is, there’s no doubt that artificial intelligence and machine learning will be great additions to health care. Like anything else, it’s a technology that can be tamed and improved and used safely. Ultimately, it will make health care better, producing more accurate and faster diagnoses and making care more cost-effective.”

“In my mind, the benefits outweigh the risks, by far,” adds Dr. Azar. “I think AI will become a future foundation of medical care.”

Dr. Azar is an employee of Alphabet Verily and is on the boards of Novartis and Verb Surgical, a robotics venture undertaken jointly by Johnson & Johnson and Google. Drs. Prasad, Caruana and Charles report no financial ties to any products discussed in the article.

1. Abramoff MD, Lavin PT, et al. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digital Medicine 2018;1:39.

2. Burlina PM, Joshi N, Pekala M, Pacheco KD, Freund DE, Bressler NM. Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. 2017;135:1170-1176.

3. Ting DSW, Cheung CY, Lim G, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318:2211-2223.

4. Ohsugi H, Tabuchi H, Enno H, Ishitobi N. Accuracy of deep learning, a machine-learning technology, using ultra-wide-field fundus ophthalmoscopy for detecting rhegmatogenous retinal detachment. Sci Rep 2017;7:9425.

5. Matsuba S, Tabuchi H, Ohsugi H, et al. Accuracy of ultra-widefield fundus ophthalmoscopy-assisted deep learning, a machine-learning technology, for detecting age-related macular degeneration. Int Ophthalmol. 2018 May 9. [epub ahead of print]

6. Prahs P, Radeck V, Mayer C, et al. OCT-based deep learning algorithm for the evaluation of treatment indication with anti-vascular endothelial growth factor medications. Graefes Arch Clin Exp Ophthalmol. 2018;256:91-98.

7. Schmidt-Erfurth U, Bogunovic H, Sadeghipour A, et al. Machine learning to analyze the prognostic value of current imaging biomarkers in neovascular age-related macular degeneration. Ophthalmol Retina 2018;2:1:24-30.

8. Bogunovic H, Waldstein SM, et al. Prediction of anti-VEGF treatment requirements in neovascular AMD using a machine learning approach. Invest Ophthalmol Vis Sci 2017;58:3240-3248.