Artificial intelligence is well-suited to ophthalmology, where data- and image-heavy subspecialties readily supply the large quantities of data needed to train neural networks. Now, for the first time in any medical field, fully autonomous AI systems have arrived in primary care settings to diagnose diabetic eye disease. Experts say these systems will help identify many more patients at risk of losing vision, and with greater sensitivity than a retinal specialist. But despite these recent breakthroughs, AI developers say you won’t be out of a job just yet.

In this article, we’ll cover some of the nuances of AI and take a look at the two autonomous ophthalmic AI systems currently available.

Send in the Bots

Experts predict that AI will play a major role in the early detection of diseases like diabetic retinopathy and diabetic macular edema. In 2019 alone, approximately 463 million adults were living with diabetes around the world, and this number is expected to grow to an estimated 700 million by 2045.1 Almost 80 percent of those with diabetes live in less-advantaged countries, where access to medical care—and to ophthalmologists in particular—may be limited. With an epidemic of these proportions, scaling up screening measures with AI technology can alleviate some of the burden.

Placed in a primary care setting or an endocrinologist’s office, AI for diabetes can improve patient outcomes and lower direct patient costs.2 People with diabetes are advised to have an annual diabetic eye exam by a general ophthalmologist or retinal specialist. However, Michael D. Abràmoff, MD, PhD, the developer of the IDx-DR and a professor of retina research at the University of Iowa Hospitals & Clinics, points out that recent studies show only 15 percent of people with Medicare insurance get this annual exam. “We also know that this noncompliance results in preventable vision loss or blindness. AI can help by performing the eye exam during the same primary-care visit,” he says.

This type of AI triage is not only more convenient for the patient; it can also have major ripple effects throughout the health-care system. As an example, Dr. Abràmoff recalls his time in New Orleans, introducing an AI system in the wake of Hurricane Katrina. “After Katrina, there was no eye care left in New Orleans,” he says. “People with diabetes went to their diabetes clinics and were told to go to their eye exams, but the wait time was more than four months and nobody went. Instead, they began losing vision and going blind. Nine months after introducing an AI for diabetic eye exams, the wait time for seeing an eye-care provider was the same day. We’re seeing similar effects from AI with the current COVID-19 situation. Patients are fearful of going to an additional appointment for their diabetic eye exam, but the exam can be done during their regular visit with AI.”

The Centers for Medicare & Medicaid Services announced in August that Medicare will pay for autonomous AI. The new CPT Category I code for autonomous AI is 9225X.

IDx-DR

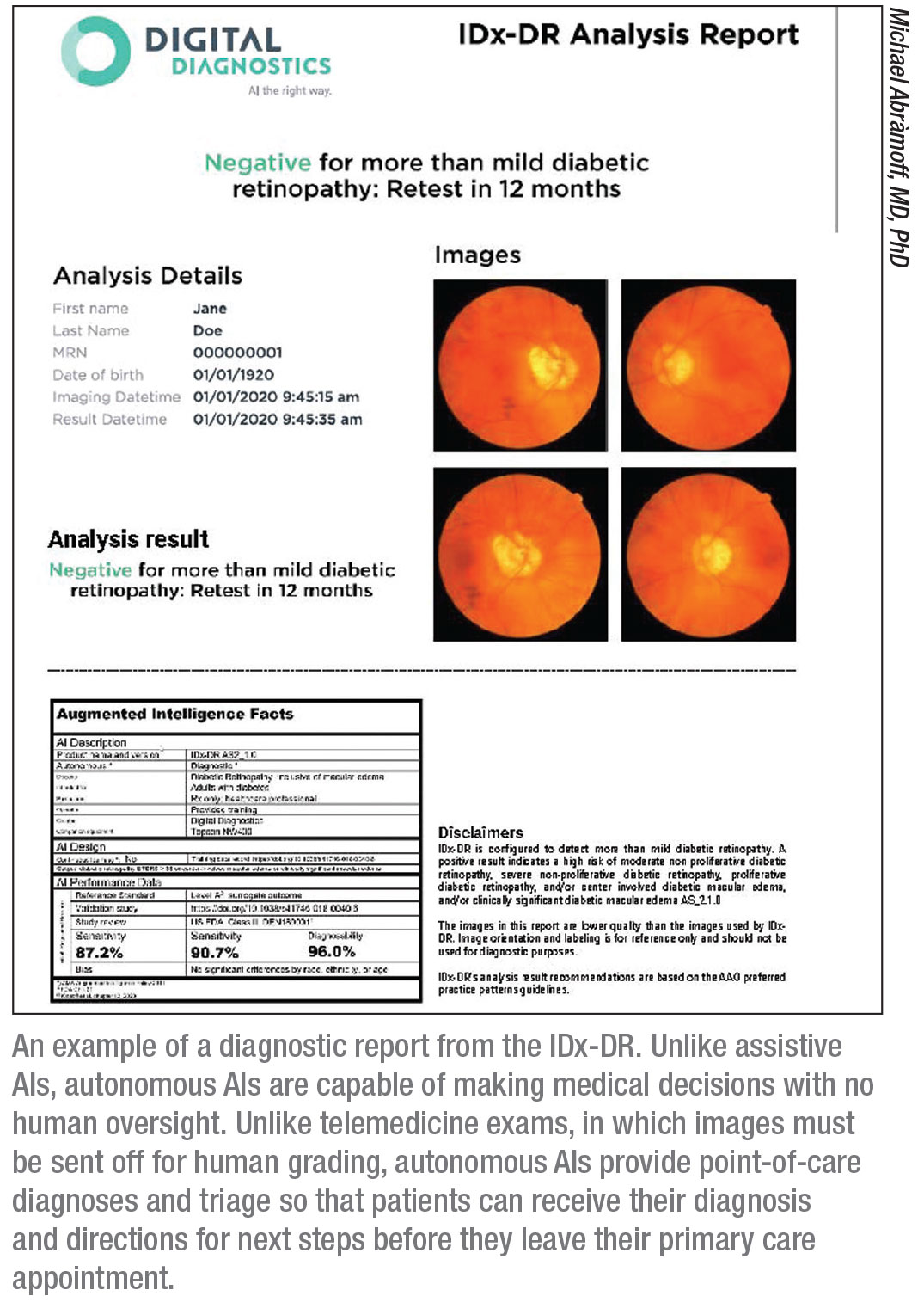

The IDx-DR (Digital Diagnostics, formerly IDx) is an autonomous AI designed to detect diabetic retinopathy and diabetic macular edema, and the first FDA-approved autonomous AI in any field of medicine.

Since its approval in 2018, this device has been in use across the country, from Stanford and Johns Hopkins to the Mayo Clinic and even in supermarkets in Delaware—and the list of locations keeps growing. The American Diabetes Association has also issued updates to its recommended standard-of-care practices to include autonomous AI for diabetic eye exams.

IDx-DR is indicated for adults aged 22 and older who haven’t been previously diagnosed with diabetic retinopathy. The AI system is compatible with the nonmydriatic Topcon TRC-NW400 digital fundus camera, but IDx-DR’s developer Dr. Abràmoff says this device isn’t for ophthalmologists or optometrists. “It’s primarily for primary care and designed to be used by an operator who’s only minimally trained,” he says. “IDx-DR fits easily into the workflow of primary care. Patients undergo their diabetic eye exams, and then by the time they’ve had blood drawn and their blood pressure and weight measured, the eye exam results are back, and the primary-care doctor can then go over them with the patient, as well as review all other aspects of diabetes.”

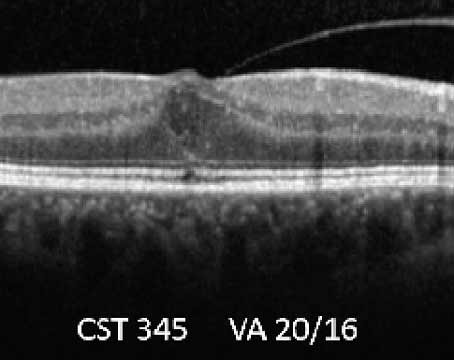

In the 2017 pivotal trial, IDx-DR was validated against clinical outcomes, including OCT, and demonstrated 87-percent sensitivity and 90-percent specificity for detecting more-than-mild diabetic retinopathy.3 “Most importantly, it met our ‘diagnosability’ endpoint,” Dr. Abràmoff says. “Diagnosability is your validated diagnostic result for a certain number of patients. A study might exclude a portion of participants if the system performed poorly on them and report good performance in a small subset of patients. But AI systems must work on the vast majority of people with diabetes in this country. If the AI performs well on only a small subset of patients, it’s not good enough. In IDx-DR’s case, diagnosability was 96 percent in a large, diverse population. The result is either positive or negative, so only 4 percent of the time did the AI say, ‘I don’t know.’ That’s very important.”
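
To make the idea of diagnosability concrete, here is a minimal Python sketch. It assumes a hypothetical list of per-patient outputs labeled positive, negative or insufficient; these label names are our own, not IDx-DR’s. Diagnosability is simply the share of all enrolled patients who received a definitive result, and the toy cohort is built to mirror the 96 percent figure above.

```python
from collections import Counter

# Hypothetical output labels (assumption: the real device may name them differently).
POSITIVE, NEGATIVE, INSUFFICIENT = "positive", "negative", "insufficient"


def diagnosability(outputs: list[str]) -> float:
    """Share of ALL patients who got a definitive (positive or negative) result.

    Patients with an "I don't know" (insufficient) output stay in the
    denominator, so a system cannot look better by quietly dropping them.
    """
    if not outputs:
        raise ValueError("no outputs to evaluate")
    counts = Counter(outputs)
    definitive = counts[POSITIVE] + counts[NEGATIVE]
    return definitive / len(outputs)


# Toy cohort of 100 patients: 96 definitive results, 4 insufficient results.
cohort = [POSITIVE] * 20 + [NEGATIVE] * 76 + [INSUFFICIENT] * 4
print(f"{diagnosability(cohort):.0%}")  # 96%
```

Keeping the insufficient outputs in the denominator is the point Dr. Abràmoff makes: a study that reports performance only on the patients the system could read says little about the rest.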

Dr. Abràmoff says the IDx-DR is invariant for sex, age and race because it’s designed around biomarkers in an attempt to mimic the thinking process of a human clinician. “We have different detectors for hemorrhages and for microaneurysms,” he says. “The AI analyzes the patient images and the detectors either fire or don’t fire. Then the outputs are combined.”
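
The detect-then-combine design Dr. Abràmoff describes can be sketched roughly as follows. This is a hypothetical illustration, not IDx-DR’s actual logic: the counts stand in for the outputs of separate lesion detectors, and the combining rule (microaneurysms alone count as mild disease, while any hemorrhage or exudate yields a positive result) is an assumption loosely based on the grading criteria Dr. Lim describes later in this article.

```python
from dataclasses import dataclass


@dataclass
class DetectorOutputs:
    """Hypothetical counts reported by separate lesion detectors for one exam."""
    microaneurysms: int
    hemorrhages: int
    exudates: int


def combine(outputs: DetectorOutputs) -> str:
    """Fuse detector outputs into one result for more-than-mild DR.

    Illustrative rule only (an assumption, not IDx-DR's logic): a detector
    "fires" when it finds at least one lesion; microaneurysms alone are
    treated as mild disease, while a hemorrhage or exudate makes the
    result positive.
    """
    hemorrhage_fired = outputs.hemorrhages > 0
    exudate_fired = outputs.exudates > 0
    if hemorrhage_fired or exudate_fired:
        return "positive"
    return "negative"


print(combine(DetectorOutputs(microaneurysms=2, hemorrhages=0, exudates=0)))  # negative
print(combine(DetectorOutputs(microaneurysms=1, hemorrhages=3, exudates=0)))  # positive
```

Because the rule consumes only lesion findings, nothing in it depends directly on raw pixel values such as the retina’s background color. That is the property behind the invariance claim, although in a real system the detectors themselves would still need to work across all fundus appearances.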

EyeArt

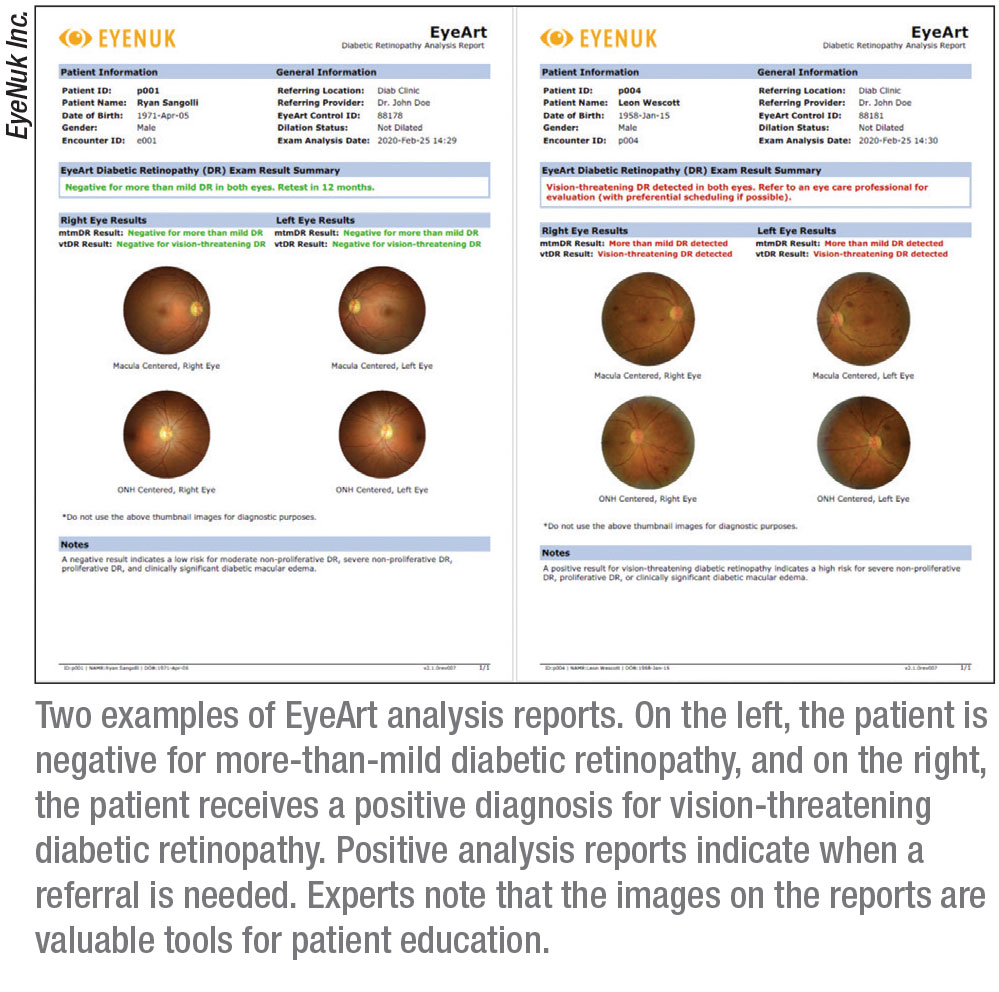

The autonomous AI system EyeArt (Eyenuk) received FDA clearance in August of this year for detecting more-than-mild and vision-threatening DR in adults. It’s the first FDA-cleared autonomous AI technology that produces diagnostic outputs for each of the patient’s eyes.

“EyeArt is approved for screening patients who are diabetic and who don’t have any known retinopathy,” says Jennifer I. Lim, MD, the Marion H. Schenk Chair in ophthalmology and director of the retina service at the University of Illinois at Chicago, Illinois Eye & Ear Infirmary. “The patient has two nonmydriatic, 45-degree photographs taken of each eye. If the image isn’t good enough, the AI will indicate that you need to dilate, but for the most part dilation isn’t necessary. Before the patient leaves the office, the readout is generated, which tells the patient whether to come back in a year or to see a retinal specialist or eye-care provider for high-risk PDR or severe NPDR, for example.” EyeArt can also determine whether there is clinically significant macular edema in addition to diabetic retinopathy, says Dr. Lim.
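
As a rough illustration of how per-eye outputs might roll up into the single readout Dr. Lim describes, the sketch below assumes three hypothetical severity categories, a “worse eye decides” rule and made-up recommendation messages. None of these names or rules come from Eyenuk; they are assumptions for illustration.

```python
from enum import IntEnum
from typing import Optional


class EyeResult(IntEnum):
    """Hypothetical per-eye result categories, ordered by severity."""
    NEGATIVE = 0            # no more-than-mild DR detected
    MORE_THAN_MILD = 1      # more-than-mild DR
    VISION_THREATENING = 2  # vision-threatening DR


def patient_recommendation(left: Optional[EyeResult], right: Optional[EyeResult]) -> str:
    """Combine two per-eye results (None = image not gradable) into one readout."""
    if left is None or right is None:
        # Mirrors the article: a poor image prompts dilation and new photographs.
        return "Image quality insufficient: dilate and retake photographs"
    worst = max(left, right)  # assumption: the more severe eye drives the readout
    if worst == EyeResult.VISION_THREATENING:
        return "Refer promptly to a retina specialist"
    if worst == EyeResult.MORE_THAN_MILD:
        return "Refer to an eye-care provider"
    return "Rescreen in 12 months"


print(patient_recommendation(EyeResult.NEGATIVE, EyeResult.MORE_THAN_MILD))
# Refer to an eye-care provider
```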

EyeArt is currently compatible with two nonmydriatic retinal cameras, the Canon CR-2 AF and the Canon CR-2 Plus AF; it functions on a cloud-based system. “There’s no software to download or install,” Dr. Lim points out. “You just take the fundus photo and it’s sent up to the cloud, where the AI cloud-based software analyzes it. The result is sent back within a minute to the local computer. It’s much faster than a typical telemedicine reading of the image.”

In the clinical trial, EyeArt demonstrated 96-percent sensitivity and 88-percent specificity for detecting more-than-mild DR; for detecting vision-threatening DR, EyeArt showed 92-percent sensitivity and 94-percent specificity.

Another study (which was funded by the National Institutes of Health and undertaken both by employees of Eyenuk and independent researchers from the Doheny Eye Institute) tested EyeArt’s diagnostic efficacy retrospectively in a real-world setting, analyzing 850,908 fundus images of 101,710 consecutive patient visits from 404 primary care clinics.4 The researchers found that 75.7 percent of visits were nonreferable, 19.3 percent were referable to an eye-care specialist and in 5 percent the DR level was unknown, per the clinical reference standard.

In this case series, EyeArt demonstrated 91.3-percent sensitivity and 91.1-percent specificity. For the 5,446 patients with potentially treatable DR (more-than-moderate NPDR and/or diabetic macular edema) 5,363 received a positive “referral” output from the EyeArt system, showing a sensitivity of 98.5 percent. The researchers concluded that their study supports the value of AI screening in the real world as an easy-to-use, automated tool for endocrinologists, diabetologists and general practitioners for DR screening and monitoring.
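
The 98.5-percent figure follows directly from the counts above. Sensitivity is the share of truly diseased cases that the system flags, TP / (TP + FN), where TP counts true positives and FN counts false negatives. A minimal check in Python:

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Share of diseased cases that the screener flags: TP / (TP + FN)."""
    return true_positives / (true_positives + false_negatives)


# Counts reported above for visits with potentially treatable DR.
treatable = 5_446
flagged = 5_363               # received a positive "referral" output
missed = treatable - flagged  # 83 visits without a referral output

print(missed)                                 # 83
print(f"{sensitivity(flagged, missed):.1%}")  # 98.5%
```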

The Physician vs. The Machine

Critics of AI screening systems are quick to point out the “tunnel vision” inherent in these devices. When patients visit an ophthalmologist, they’re examined for a number of ocular diseases, including cataracts, glaucoma, diabetic retinopathy and tumors, but the two AI systems currently in use look only for the diseases they’re trained to detect. Some worry about what’s being left out.

Dr. Lim acknowledges the limited screening scope but believes that the alternative—not being screened at all—carries more serious consequences. And, she notes, these AI systems will occasionally identify non-diabetes-related conditions, albeit in a roundabout way.

AIs are not immune to type I and type II errors (false positives and false negatives) and will sometimes misdiagnose patients, but even if the result is a false positive, Dr. Lim says the AI is likely picking up on pathology that needs further examination anyway. “If the AI sees something abnormal in the fundus images, it may read it as a false positive and that may trigger a normal DR diagnosis,” Dr. Lim explains. “In our pictures for the EyeArt system, there were a few cases of false positives, and for the most part the actual diagnoses were macular degeneration or choroidal nevus, or some other disease for which that person should be seen by an eye-care professional.

“The downside would be if you have a false negative,” she continues. “The rate of false negatives is very low, though, and when the system does miss disease, it’s usually still at the mild NPDR stage. The AI system did not miss high-risk PDR or very severe NPDR cases.”

In spite of the occasional false positives or negatives, Dr. Abràmoff says the machine’s accuracy can be impressive. “In fact, AI’s accuracy is higher than mine as a retina specialist,” he says. “If you look at studies for sensitivity, it’s actually higher than in other studies that compare board-certified ophthalmologists to the same standard. Their sensitivity is about 30 to 40 percent, and for the AIs, it’s closer to 90 percent.”

This may sound a little frightening, but Dr. Lim reassures us that retinal specialists aren’t missing vital pathologies. “When retinal specialists look at an eye, they aren’t going to miss PDR for the most part,” she explains. “But they might under-grade, and in fact, that’s what we found when we did the EyeArt studies that compared physician DR grading of an eye to both the reading center and AI grading methods.

“Retinal specialists’ sensitivity for dilated ophthalmoscopy overall is 28 percent, compared to 96 percent with the EyeArt system,” Dr. Lim continues. “But retinal specialists’ specificity is higher—99.6 percent, versus 87 to 88 percent with the EyeArt system. That’s because if there’s some other disease present, we’ll diagnose what it is; we aren’t going to mistakenly diagnose it as DR.”

Dr. Lim says that when compared to general ophthalmologists, the retinal specialists were found to exhibit higher sensitivity—59.5 percent versus 20.7 percent—and comparable specificity at around 99 percent for detecting mild NPDR. The reason for this low sensitivity compared to the AI, Dr. Lim explains, is once again a tendency to under-grade.

“We might grade the DR as mild, but the machine and the reading center may grade them as moderate,” she says. “There’s a certain number of hemorrhages needed to cross a diagnosis threshold, and perhaps the specialists missed a few of these hemorrhages. In mild NPDR, you can only have a microaneurysm—you can’t have hemorrhages. For the most part these images were mild, and as I noted before, retinal specialists didn’t miss any cases of sight-threatening retinopathy. Some were missed by general ophthalmologists, but this number was very low, and only around 19 percent of their false negatives were more severe diabetic retinopathy. The bottom line is that AI performs quite well compared to reading centers and certainly—unfortunately for us—performs better than retinal specialists looking at an eyeball.”
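
To see what these trade-offs mean in practice, the sketch below estimates expected misses and false alarms per 1,000 screened patients, using the sensitivity and specificity figures Dr. Lim cites (taking 87 percent as EyeArt’s specificity). The 20 percent disease prevalence is an illustrative assumption, not a figure from the EyeArt studies.

```python
def expected_errors(n: int, prevalence: float, sens: float, spec: float) -> tuple[float, float]:
    """Expected (false negatives, false positives) when screening n patients."""
    diseased = n * prevalence
    healthy = n - diseased
    false_negatives = diseased * (1 - sens)  # diseased patients the test misses
    false_positives = healthy * (1 - spec)   # healthy patients the test flags
    return false_negatives, false_positives


ASSUMED_PREVALENCE = 0.20  # hypothetical; chosen only for illustration

for label, sens, spec in [
    ("Retina specialist, dilated exam", 0.28, 0.996),
    ("EyeArt", 0.96, 0.87),
]:
    fn, fp = expected_errors(1000, ASSUMED_PREVALENCE, sens, spec)
    print(f"{label}: ~{fn:.0f} missed, ~{fp:.0f} false alarms")
# Retina specialist, dilated exam: ~144 missed, ~3 false alarms
# EyeArt: ~8 missed, ~104 false alarms
```

Under these assumptions, the dilated exam misses far more disease, while the AI sends more patients for a second look who turn out not to have DR. As Dr. Lim notes, most of the physicians’ misses were mild cases, so raw counts like these overstate the clinical gap.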

As with any new technology, we often wonder about obsolescence. Will reliance on AI fundus image interpretation negatively impact the next generation of retinal specialists’ ability to read the same images? Will we even teach it to future students? Dr. Lim says that probably won’t be an issue. “We’re still going to be seeing patients with the pathology,” she says. “We aren’t an image-only type of discipline, and there will always be patients who come in because they can’t see or because they’ve been referred by the AI machine. So we’ll still need to take a look at the eye and know how to interpret what we see and make a diagnosis. The only difference will be that we won’t be seeing as many normal or very mild cases, because the AI will have screened out those less severe cases.”

In this way, Dr. Lim says that AI can help improve workflow. “With an AI system screening and redirecting those with DR, we can become more focused on the patients needing treatment, since only those with significant DR will be sent to us,” she says.

Tech-side Manner

Unlike the AIs envisioned by Hollywood or even IBM’s relatively amiable Jeopardy! champ Watson, these ophthalmic screening systems can’t offer the personal contact or counseling of a real ophthalmologist. “When I see a patient, I emphasize control of blood pressure, blood sugar, cholesterol and hemoglobin A1c, and a machine is just not going to do that for you,” Dr. Lim says. She suggests adding this information to the patient literature that accompanies a diagnosis. “I also really urge the AI system developers to include lifestyle advice, or to at least have the person taking the pictures emphasize it. If the screening is being done in a primary care setting, then hopefully the patient is getting the message from their primary care doctor.”

Best Practices

Autonomous AI systems have enormous potential for good in the medical field. However, Dr. Abràmoff points out that, to deliver these benefits, AI systems must be ethical at their core. In a paper published earlier this year in the American Journal of Ophthalmology, Dr. Abràmoff and his co-authors reviewed the literature on bioethical principles for AI and proposed a set of evaluation criteria.5 He notes that these criteria can help physicians understand the benefits and limitations of autonomous AI for their patients.

Here are the five key ethical principles he says AIs should fulfill:

• Improve patient outcomes.

• Be designed in alignment with human clinical cognition. “You need to understand how the AI works and arrives at a diagnosis,” Dr. Abràmoff says.

• Be accountable for the data. “Ensure patients understand what happens to the data, that the data isn’t sold or used for something else,” he says. “That’s a big fear among patients.”

• Be rigorously validated for safety, efficacy and equity. “People are worried about racial and ethnic bias, so in both the design and the validation, you need to minimize bias and essentially eliminate it,” he says.

• Have appropriate liabilities. Dr. Abràmoff and his team first proposed that liability ought to lie with the AI creator. “That’s now part of the American Medical Association’s policy, to make sure AI creators and companies are liable for the performance of their AIs,” he says.

“These are important principles and best practices for how to deal with AI,” Dr. Abràmoff says. “Validation in peer-reviewed literature, pre-registered trials and comparisons against the outcome are all key. Many AI studies you see compare AI to physicians. But the physicians themselves have never been validated against clinical outcomes. What patients care about is the effect—the clinical outcome. Does the patient see better or worse? We should be evaluating AIs based on how they perform against the outcome.”

Mitigating Bias in AI

Creating an ethical AI starts with the development process and the type of data on which you train it. “You need to design AI from the ground up to minimize bias,” says Dr. Abràmoff. “If you don’t, you can have all sorts of very weird associations.

“AIs trained only on large data sets are essentially association machines that learn to associate an image with a diagnosis, but without understanding at all what they do,” Dr. Abràmoff continues. “The AI knows about pixels in the image, numbers for each point in the image, and it associates the numbers with the diagnosis, but it doesn’t understand what a hemorrhage is and it doesn’t understand what an optic disc is, or a blood vessel, or a fovea. It only knows these pixel values and the diagnosis. And so if you ask, ‘Why did you decide this patient had diabetic retinopathy?’ it can’t tell you the patient had three hemorrhages and some exudates. It can tell you that some pixels were more important in terms of making a decision than others, which is what many people talk about when they do post hoc justification for these AIs.”
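
The “some pixels were more important” style of explanation can be illustrated with occlusion sensitivity, one common post hoc technique; the article doesn’t say which method any particular system uses. The sketch blanks out one patch of the image at a time and records how much the model’s score drops. The toy model and image are hypothetical stand-ins for a trained network and a fundus photo.

```python
from typing import Callable

Image = list[list[float]]


def occlusion_map(model: Callable[[Image], float], image: Image,
                  patch: int, fill: float = 0.0) -> list[list[float]]:
    """Score drop when each patch x patch block is replaced by `fill`.

    A larger drop means the model relied more on that region.
    """
    base = model(image)
    h, w = len(image), len(image[0])
    heat = []
    for top in range(0, h, patch):
        row = []
        for left in range(0, w, patch):
            occluded = [r[:] for r in image]  # copy so the original stays intact
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    occluded[y][x] = fill
            row.append(base - model(occluded))
        heat.append(row)
    return heat


def toy_model(img: Image) -> float:
    """Hypothetical 'association machine': its score depends only on the top-left 2x2 pixels."""
    return sum(img[y][x] for y in range(2) for x in range(2)) / 4


image = [[1.0] * 4 for _ in range(4)]
print(occlusion_map(toy_model, image, patch=2))  # [[1.0, 0.0], [0.0, 0.0]]
```

The map shows where the model looked, not why: nothing in it says “three hemorrhages and some exudates,” which is exactly the limitation Dr. Abràmoff describes.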

For this reason, Dr. Abràmoff says, the biggest problem is a training data set without enough examples of every race and ethnicity. “The background color of the retina can vary greatly among different ethnicities,” he says. “An AI may be really good at associating images with the diagnoses you gave, but if you have a patient whose fundus image doesn’t resemble what’s in the training data, the AI won’t perform well.”

Having lots of examples in your training data isn’t enough to ensure a lack of bias, though—it’s impossible to account for every retinal variation. That’s why understanding how the AI reaches its decision is also key. When a clinician looks for biomarkers such as lesions or exudates, the color of the retina doesn’t matter, Dr. Abràmoff says. “If they have these lesions, then they have diabetic retinopathy. You either have lesions or you don’t.”

Both the IDx-DR and EyeArt algorithms detect lesions from diabetic retinopathy. AIs that can detect specific biomarkers and retinal pathology are more likely to be invariant for race, age and sex. A recent OCT-based algorithm developed in South Korea and trained on 12,247 OCT scans of South Korean patients appeared to focus appropriately on differences within the macular area to extract features associated with wet AMD. The research team validated their findings on 91,509 OCT scans from an ethnically diverse population in the United States, and accuracy remained high. They noted, however, that their next hurdle is understanding how the algorithm classified and diagnosed disease.6

Another way AI developers guard against bias is careful training and regular evaluation of reading center graders. “The EyeArt system software was trained on hundreds of thousands of images from multiple large databases that were read by reading centers,” Dr. Lim says. “These reading center graders score the level of DR on a scale of one to 100, with levels of 35 or higher indicating more-than-mild DR. Images may be graded as anything in between (level 35, 45, 57 or 58, for example), and graders are compared both to each other and to their own previous work to provide checks and balances on their grading and ensure uniformity and adherence to standards. As a result of these grading standards, graders have become more adept and consistent in their evaluations of fundus images.” (For further reading on how bias influences AI algorithms, see the sidebar below.)
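
One way to quantify how consistently two graders agree is Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. The article doesn’t say which statistic reading centers use, so treat this as a swapped-in, commonly used measure. The sketch converts hypothetical grader levels into more-than-mild calls using the 35-or-higher threshold above, then computes kappa.

```python
MTM_DR_THRESHOLD = 35  # levels of 35 or higher indicate more-than-mild DR


def is_more_than_mild(level: int) -> bool:
    return level >= MTM_DR_THRESHOLD


def cohens_kappa(a: list[bool], b: list[bool]) -> float:
    """Chance-corrected agreement between two graders' yes/no calls."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty lists of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    p_a, p_b = sum(a) / n, sum(b) / n  # each grader's rate of "yes" calls
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1:
        return 1.0  # degenerate case: both graders gave one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical levels assigned by two graders to the same eight images.
grader_a = [20, 35, 45, 20, 57, 35, 20, 45]
grader_b = [20, 45, 45, 20, 57, 20, 35, 45]
calls_a = [is_more_than_mild(x) for x in grader_a]
calls_b = [is_more_than_mild(x) for x in grader_b]
kappa = cohens_kappa(calls_a, calls_b)
print(f"{kappa:.2f}")  # 0.47
```

Here the graders agree on six of eight images (75 percent), but because both call more-than-mild DR often, much of that agreement could occur by chance, so kappa is noticeably lower.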

Standardizing AI

Dr. Lim is a member of the Collaborative Community on Ophthalmic Imaging (CCOI), which was created by the FDA and Stanford University. “We’re bringing people together into teams for different diseases such as AMD, glaucoma, diabetes and ocular tumors to figure out AI standards and what we need to set up AI so that people can use it,” she says. Dr. Lim belongs to the AMD CCOI group.

Dr. Abràmoff heads up the Foundational Principles of Ophthalmic Imaging and Algorithmic Interpretation group, which includes entities such as the FDA, the Federal Trade Commission, Google, Microsoft, IBM, Apple and a host of bioethicists and workflow experts. “Our goal is to have a bioethical foundation for how we build, validate, test and deploy AI within hospital systems and without,” he says. “Many of the AIs out there now assist the doctor in making a decision, and the doctor is ultimately liable for that decision, but autonomous AIs make the decision themselves.”

Dr. Lim has no related financial disclosures. Dr. Abràmoff is the founder and executive chairman of Digital Diagnostics. He has patents and patent applications related to IDx-DR and is an investor in the company.

1. Facts & Figures. International Diabetes Federation. Accessed September 29, 2020. http://idf.org/aboutdiabetes/what-is-diabetes/facts-figures.html.

2. Wolf RM, Channa R, Abràmoff MD, et al. Cost-effectiveness of autonomous point-of-care diabetic retinopathy screening for pediatric patients with diabetes. JAMA Ophthalmol. September 3, 2020. [Epub ahead of print].

3. Abràmoff MD, Lavin PT, Birch M, et al. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit Med 2018;1:39.

4. Bhaskaranand M, Ramachandra C, Bhat S, et al. The value of automated diabetic retinopathy screening with the EyeArt system: A study of more than 100,000 consecutive encounters from people with diabetes. Diabetes Technol Ther 2019;21:11:635-43.

5. Abràmoff MD, Tobey D, Char DS. Lessons learned about autonomous AI: Finding a safe, efficacious, and ethical path through the development process. Am J Ophthalmol 2020;214:134-42.

6. Rim TH, Lee AY, Ting DS, et al. Detection of features associated with neovascular age-related macular degeneration in ethnically distinct data sets by an optical coherence tomography: Trained deep learning algorithm. Br J Ophthalmol. September 9, 2020. [Epub ahead of print].

7. Klonoff DC, Schwartz DM. An economic analysis of interventions for diabetes. Diabetes Care 2000;23:3:390-404.