In recent years, technology for the structural and functional examination of the eye has proliferated; new tools include a number of imaging technologies and new forms of perimetry such as short-wavelength automated perimetry (SWAP) and frequency doubling technology (FDT). Having more ways to acquire data and detect damage is undoubtedly a good thing, but because clinicians are pressed for time and resources, the availability of these new technologies has led to a gradual reduction in the use of more established tests such as white-on-white perimetry, also known as standard automated perimetry (SAP). Unfortunately, while we may gain from the information provided by the new tests, using the more established ones less frequently may undermine our ability to detect and manage disease.

Here, I’d like to make the case that our patients will benefit more from increased use of SAP than from use of the alternate forms of perimetry.

Why Frequency Matters

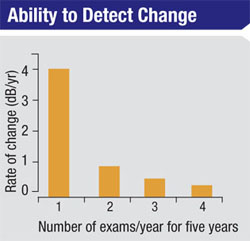

A key aspect of managing glaucoma is determining how quickly the disease is progressing, because this information (combined with other factors such as the patient’s age and stage of damage) will determine the type of management most likely to minimize the rate of visual loss with minimal impact on the patient’s quality of life. Determining the rate of progression requires performing multiple tests over time, and that’s where the problem arises: Slower rates of progression require more frequent tests to detect and confirm the rate of change.

We’ve conducted studies to determine the amount of perimetric testing required to obtain statistically reliable data. For example, we found that in a patient with definite glaucomatous visual field loss and no prior data, six SAP exams have to be performed during the first two years of testing in order to detect a rate of progression of about 2 dB per year. That rate of progression is sufficient to threaten a patient’s vision over time, but it’s not easily detected with fewer than six exams during that two-year period.
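As a rough illustration of why the number of exams matters, a rate of progression can be estimated by fitting a straight line to a summary index, such as mean deviation, across visits. The sketch below assumes ordinary least-squares regression, which is one common approach and not necessarily the analysis used in the studies described here. The function name `progression_rate` and the sample values are hypothetical.

```python
import math


def progression_rate(times_years, md_db):
    """Return (slope in dB/year, standard error of the slope).

    Assumption: ordinary least-squares fit of mean deviation against time.
    At least three exams are needed to estimate the standard error.
    """
    n = len(times_years)
    if n < 3 or n != len(md_db):
        raise ValueError("need at least three paired exams")

    mean_t = sum(times_years) / n
    mean_y = sum(md_db) / n

    # Spread of exam times; zero means all exams fall on the same date.
    sxx = sum((t - mean_t) ** 2 for t in times_years)
    if sxx == 0:
        raise ValueError("exams must be spread over time")

    sxy = sum((t - mean_t) * (y - mean_y)
              for t, y in zip(times_years, md_db))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t

    # Residual scatter around the fitted line drives the uncertainty.
    rss = sum((y - (intercept + slope * t)) ** 2
              for t, y in zip(times_years, md_db))
    std_err = math.sqrt(rss / (n - 2) / sxx)
    return slope, std_err


if __name__ == "__main__":
    # Hypothetical patient: six exams spread over two years.
    times = [0.0, 0.4, 0.8, 1.2, 1.6, 2.0]
    mean_deviation = [-4.1, -4.6, -5.9, -6.2, -7.5, -7.8]
    slope, std_err = progression_rate(times, mean_deviation)
    print(f"estimated rate: {slope:.2f} dB/year (SE {std_err:.2f})")
```

The standard error shrinks as more exams are added over the same period, which is the statistical reason a given rate of change is harder to confirm from only a few fields.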

Unfortunately, glaucoma patients are already receiving a disturbingly small number of examinations and visual field tests. One study published in 2007 used data from a popular health maintenance organization to find out which patients were receiving medication for glaucoma and how many exams those patients were receiving each year.1 The results were staggering: 61 percent of patients who used a medication for glaucoma received fewer than one exam per year. We did a similar survey in Canada recently, with slightly better results; we found that patients with visual field loss from glaucoma received, on average, between one and two exams per year. Either way, in my view, this represents an inadequate number of examinations for the majority of patients.

Furthermore, the number of visual field exams given to these patients has been dropping. One recent paper reviewed data on more than a half-million glaucoma suspects and patients diagnosed with open-angle glaucoma between 2001 and 2009, seen by either optometrists or ophthalmologists.2 The data showed that for individuals with open-angle glaucoma, the odds of undergoing visual field testing decreased by 36 percent from 2001 to 2005, and another 12 percent between 2005 and 2009 (a 44-percent total decline over the entire period). Furthermore, among those seen exclusively by ophthalmologists, the probability of visual field testing at a given exam decreased from 65 percent in 2001 to 51 percent in 2009.
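As a quick check of how the two figures relate to the total, assuming the two reductions compound multiplicatively (an assumption made here for illustration, not a calculation quoted from the paper):

$$
(1 - 0.36) \times (1 - 0.12) = 0.64 \times 0.88 = 0.5632 \approx 1 - 0.44
$$

In other words, a 36-percent drop followed by a further 12-percent drop leaves about 56 percent of the starting odds, which is consistent with the reported 44-percent total decline.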

Given that we’re already making key treatment decisions on the basis of statistically inadequate data, this is a very disturbing trend.

Are the Advantages Real?

It certainly seems reasonable that a test other than SAP might detect glaucomatous damage earlier. It’s widely accepted that an individual can lose a lot of ganglion cells before functional damage becomes evident, so if a test can detect very specific types of damage it might detect a problem earlier. In addition, most ophthalmologists believe that SAP is simply not a very sensitive test, and that’s probably true.

It’s clear that many physicians—especially glaucoma specialists—are now using SWAP and FDT for some patients. The switch to these types of perimetry, at least for detection of disease, is usually justified by citing published evidence that these tests may detect glaucoma sooner. The problem with that line of evidence is that in most of those studies the data has been influenced negatively by sampling bias.

Typically, these studies are done in patients who are glaucoma suspects. In order to determine whether SWAP or FDT can detect damage missed by SAP, the inclusion criterion is that patients must have a normal SAP visual field; patients who have an abnormal SAP field are excluded. However, that selection criterion means these studies never look at the other side of the equation: How many of the subjects would have had a normal SWAP or FDT field but an abnormal SAP field? In other words, how many subjects with damage would be detected by SAP but missed by SWAP and FDT? That question isn’t being addressed, which leads to an inherent sampling bias. When we exclude the patients who’ve already produced abnormal SAP results, it shouldn’t be surprising that we’re left with the impression that SWAP detects glaucoma earlier.

To me, a more sensible way to compare the sensitivity of the tests would be to track patients who already have glaucoma and field damage over time, to see whether one technique can pick up progression more quickly than the other. A few such studies have been done, and the evidence for the superiority of SWAP or FDT has not been very persuasive. For example, one study of 500 eyes of 250 patients with ocular hypertension found that although the prevalence of SWAP defects was higher in this cohort, the incidence of SWAP and SAP defects was the same.3 The higher prevalence can be wholly explained by the sampling bias described above.

In fact, several recent papers suggest that SWAP and FDT are not superior to SAP.4,5 It appears that the result of such comparisons depends largely on the population being studied and the study design.

Given these potential flaws in study design, I believe the evidence that SWAP or FDT is a better tool for diagnosing glaucoma or monitoring progression is not that persuasive. At least, it is not persuasive enough to justify substituting SWAP or FDT for SAP, given the limited time clinicians have to conduct these tests.

Furthermore, SAP has a few advantages over the alternatives. One of those advantages is an extensive comparative database of existing data that’s used to generate probabilities regarding whether a given outcome is likely to be normal or indicative of damage. Those databases are constantly updated as new data is generated, and the latest data is usually installed when you purchase or receive a new programmable read-only memory chip.

Unfortunately, it’s impossible to do a direct comparison between the size of SAP databases and SWAP or FDT databases, because much of their content is proprietary. However, I think it’s safe to say that the existing databases for SAP are more extensive, simply because of the volume of testing that’s been done. There are many more SAP machines out there, and ophthalmologists have much more experience with that test.

Even with that advantage, if an alternative test were to demonstrate clear, significant superiority to SAP—if it were 10 or 15 percent more sensitive, for example—that might justify a clinician considering replacing SAP. But to date, even that level of superiority has not been convincingly demonstrated.

Detection vs. Progression

Some might make the argument that while more SAP tests are arguably better for following progression, the alternative visual field tests are mostly useful for early detection of glaucoma. That would theoretically justify their use in glaucoma suspects. However, I believe the distinction between detection of the disease and detection of progression is arbitrary. We simply decide the point at which a patient “has” clinical disease. Biologically speaking, there isn’t a switch that goes on and says, “Now you’ve gone from being a glaucoma suspect to a glaucoma patient.” It’s a sliding scale.

So, if a patient has elevated IOP and a suspicious optic disc, you’re going to have to follow the patient over time to decide whether there is change that’s sufficient to warrant the diagnosis of manifest glaucoma. Obviously, in some cases a patient comes in and your diagnosis is cut-and-dried; the patient has disc damage and visual field damage, therefore the diagnosis is straightforward. Now you have to follow the patient over time to see how fast this patient is going to progress. But when the patient’s condition is less obvious, I don’t think the strategy should be different. Glaucoma is a progressive disease, and you want to know how fast the disease is progressing.

Basically, the more devices you use to follow a patient, the less continuity you have. You need to get lots of data from a single source over a long period of time to really know what’s going on and decide whether the patient is progressing rapidly or slowly. Whatever theoretical advantages come with using SWAP or FDT, using them instead of SAP eliminates one piece of data that could help provide a more accurate picture of the patient’s trajectory.

Structure vs. Function

It’s clear that another factor associated with the decline in visual field exams is the increasing use of imaging devices. The study mentioned earlier found that while visual field exams decreased 44 percent between 2001 and 2009, the number of imaging exams increased by 147 percent.2 It’s unfortunate to think that one type of testing may be increasing at the expense of the other, but that is likely to be the case. And the reality is that there have been huge advances in imaging technology to date, and this trend is likely to continue in the future. (Unfortunately, this study didn’t evaluate the different types of visual fields being performed.)

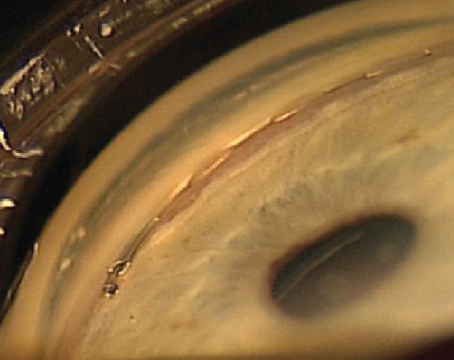

Making the Most of Limited Time

I don’t mean to suggest that the alternate perimetry tests are without value. I can imagine circumstances in which it might make sense to use more than one test. Suppose you have a patient in whom you absolutely have to be sure whether or not damage is present. Perhaps every single member of this patient’s family had glaucoma; or this patient has a very high risk of progressing very fast, because the patient is black, myopic, very young and has high IOP. Under these circumstances you might want the reassurance of every possible bit of evidence regarding the patient’s condition. Furthermore, the situation would be different if we worked in a world in which we had unlimited resources and our patients had no problem doing any of these tests. In that world it would make sense to do them all, and do them frequently.

Unfortunately, we have to be selective about the tests we perform. Also, the vast majority of patients most clinicians see are relatively old, so the need to assemble all possible evidence is likely to be a rare exception. Of course, there’s nothing wrong with using these tests in research settings or other situations. But for the general ophthalmologist or specialist who wants to track glaucoma in a given patient, I believe it makes very little sense to jeopardize the frequency of SAP examinations in order to use the other tests.

An abnormal SAP is very persuasive evidence that a patient has glaucoma, and once that’s established, the benefit of doing something like SWAP or FDT is questionable. By spending our limited time doing SAP instead, we increase our ability to accurately analyze the patient’s condition and choose the most beneficial management protocol.

Dr. Chauhan is a professor, research director and chair in vision research at the Department of Ophthalmology and Visual Sciences at Dalhousie University in Halifax. He leads the Canadian Glaucoma Study, the largest clinical study of glaucoma conducted in Canada. He has no financial interest in any of the technologies discussed in the article.

1. Quigley HA, Friedman DS, Hahn SR. Evaluation of practice patterns for the care of open-angle glaucoma compared with claims data: the Glaucoma Adherence and Persistency Study. Ophthalmol 2007;114:9:1599-606.

2. Stein JD, Talwar N, LaVerne AM, et al. Trends in use of ancillary glaucoma tests for patients with open-angle glaucoma from 2001 to 2009. Ophthalmol 2012; Jan. 4 [Epub ahead of print].

3. Demirel S, Johnson CA. Incidence and prevalence of short wavelength automated perimetry deficits in ocular hypertensive patients. Am J Ophthalmol 2001;131:6:709-15.

4. van der Schoot J, Reus NJ, Colen TP, Lemij HG. The ability of short-wavelength automated perimetry to predict conversion to glaucoma. Ophthalmol 2010;117:1:30-4.

5. Haymes SA, Hutchison DM, McCormick TA, Varma DK, Nicolela MT, LeBlanc RP, Chauhan BC. Glaucomatous visual field progression with frequency-doubling technology and standard automated perimetry in a longitudinal prospective study. Invest Ophthalmol Vis Sci 2005;46:547-54.

6. Chauhan BC, Garway-Heath DF, Goñi FJ, Rossetti L, Bengtsson B, Viswanathan AC, Heijl A. Practical recommendations for measuring rates of visual field change in glaucoma. Br J Ophthalmol 2008;92:4:569-73.